On 23 December 2025, MiniMax released MiniMax M2.1, its most capable open-source large language model (LLM) to date, built explicitly for real-world programming and workflows. It targets developers, AI teams, and companies looking for a powerful, cost-effective alternative to expensive proprietary models. M2.1 delivers state-of-the-art performance on multilingual tasks, coding, and complex agent environments. The new version builds substantially on its predecessor, both increasing capabilities and broadening its range of applications.

What is MiniMax M2.1?

MiniMax M2.1 is an open-source large language model from MiniMax, designed for coding assistance, agentic tool use, multilingual software engineering, and real-world task automation. Like its predecessor M2, M2.1 uses a Mixture-of-Experts (MoE) design with an estimated 300 billion total parameters, of which about 10 billion are activated per token, balancing performance with efficiency.

While the core architecture remains compact and efficient, M2.1's training and design focus on multilingual programming proficiency, long-running toolchain execution, and full-stack application development, which makes it well suited to both individual developers and enterprise workflows.
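To make the "total versus active parameters" idea concrete, here is a minimal toy sketch of top-k Mixture-of-Experts routing in plain Python. It is not MiniMax's implementation: the expert count, `k`, vector size, and the names `top_k_route`, `moe_forward`, and `active_fraction` are hypothetical, and real MoE layers use learned router matrices and feed-forward experts running on accelerators. The point is only that a router scores every expert, but just `k` of them run for each token, which is how a model can hold hundreds of billions of parameters while activating roughly 10 billion per token.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]

def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, gates))

def moe_forward(x: Vector, experts: Sequence[Callable[[Vector], Vector]],
                router_weights: Sequence[Vector], k: int) -> Vector:
    """Toy MoE layer: score every expert, but run only the top-k for this token."""
    # One router logit per expert: dot product of the token vector with that expert's router row.
    logits = [sum(xi * wi for xi, wi in zip(x, row)) for row in router_weights]
    out = [0.0] * len(x)
    for idx, gate in top_k_route(logits, k):
        y = experts[idx](x)  # only the selected experts do any computation
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out

def active_fraction(total_params: float, active_params: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active_params / total_params

if __name__ == "__main__":
    random.seed(0)
    d, num_experts, k = 4, 8, 2  # hypothetical toy sizes
    # Each toy "expert" just scales its input by a different factor.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in range(1, num_experts + 1)]
    router = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(num_experts)]
    token = [random.uniform(-1, 1) for _ in range(d)]
    print(moe_forward(token, experts, router, k))
    # Figures quoted in this article: about 300B total, about 10B active per token.
    print(f"{active_fraction(300e9, 10e9):.3f}")  # 0.033
```

With the article's figures, only about 3.3% of the weights participate in any one token's forward pass, which is where the efficiency claim comes from.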

MiniMax M2.1: Major Improvements Over M2

MiniMax M2.1 offers several key enhancements over the previous version:

1. Enhanced Multilingual Programming

While most AI coding models perform well in only a narrow range of languages, M2.1 extends support to Rust, Java, C++, Kotlin, TypeScript, JavaScript, Objective-C, and more. This broad language coverage lets it work across diverse codebases and full-stack projects.

2. Full-Stack Development and Aesthetics

M2.1 improves app and web development capabilities, producing polished and functionally robust output for complex user interfaces, 3D scene simulations, and mobile apps for Android and iOS.

3. Composite Task Handling

With improved Interleaved Thinking, M2.1 handles multi-step instructions and integrated execution more effectively, allowing it to tackle multi-step logic problems, complex coding tasks, and real-world office instructions.

4. Faster, More Effective Responses

Compared to M2, the new model produces more precise and efficient outputs while using fewer tokens and reducing latency, an advantage in high-throughput workflows such as continuous integration and in interactive coding sessions.

5. Better Agent/Tool Integration

M2.1 generalizes substantially better across agent frameworks and coding tools such as Claude Code, Droid, Cline, Kilo Code, Roo Code, and BlackBox, with better context management and more consistent results across tool calls.

6. Improved Natural Language Capabilities

Beyond programming, the model produces well-structured responses for documentation, technical writing, and general conversation, which makes it more versatile for supporting developer tasks.
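Interleaved thinking and tool integration (items 3 and 5 above) rest on the same basic pattern: the model reasons, calls a tool, reads the result, and reasons again before the next call. The sketch below illustrates that generic loop in Python; it is not MiniMax's API or agent framework. `Step`, `run_agent`, `scripted_model`, and the `add` tool are hypothetical, and `scripted_model` is a fixed script standing in for real model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

@dataclass
class Step:
    thought: str                        # reasoning the model emits before acting
    tool: Optional[str] = None          # tool to call next, if any
    args: Dict[str, str] = field(default_factory=dict)
    answer: Optional[str] = None        # a final answer ends the loop

History = List[Tuple[str, str]]

def run_agent(model: Callable[[History], Step],
              tools: Dict[str, Callable[..., str]],
              task: str, max_steps: int = 8) -> Tuple[str, History]:
    """Alternate model reasoning with tool execution until an answer appears."""
    history: History = [("user", task)]
    for _ in range(max_steps):
        step = model(history)
        history.append(("thought", step.thought))
        if step.answer is not None:
            return step.answer, history
        if step.tool not in tools:
            raise KeyError(f"unknown tool: {step.tool}")
        # Feed the tool's output back so the next reasoning step can use it.
        history.append(("tool_result", tools[step.tool](**step.args)))
    raise RuntimeError("agent did not finish within max_steps")

# Hypothetical tool registry; a real agent would expose shells, browsers, interpreters.
TOOLS: Dict[str, Callable[..., str]] = {"add": lambda a, b: str(int(a) + int(b))}

def scripted_model(history: History) -> Step:
    """Stand-in for an LLM: a fixed script that reads earlier tool results."""
    results = [content for role, content in history if role == "tool_result"]
    if not results:
        return Step("First add 2 and 3.", tool="add", args={"a": "2", "b": "3"})
    if len(results) == 1:
        return Step(f"Partial sum is {results[0]}; now add 4.",
                    tool="add", args={"a": results[0], "b": "4"})
    return Step("Both additions are done.", answer=results[-1])

if __name__ == "__main__":
    answer, history = run_agent(scripted_model, TOOLS, "Compute (2 + 3) + 4")
    for role, content in history:
        print(f"{role:>11}: {content}")
    print("answer:", answer)  # answer: 9
```

The `max_steps` cap is a simple guard against an agent that never converges; production frameworks typically add similar limits along with timeouts and error handling for failed tool calls.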

MiniMax M2.1 Benchmark Performance

Benchmark results position MiniMax M2.1 among the top AI models for coding:

| Benchmark | Category / Focus Area | MiniMax M2.1 Score | What the Benchmark Measures | Key Takeaway |
|---|---|---|---|---|
| SWE-bench Multilingual | Software Engineering (Multilingual Coding) | 72.5% | Real-world software engineering tasks across multiple programming languages | Demonstrates strong multilingual coding ability, competitive with top proprietary models |
| VIBE-bench (open-sourced) | Full-Stack and Agentic Execution | 88.6% (average) | End-to-end application building, including UI, logic, tool use, and execution | Shows exceptional performance in real-world, agent-driven workflows |

Benchmark Analysis (What These Numbers Actually Mean)

SWE-bench Multilingual (72.5%)

- This benchmark evaluates practical coding ability, not just code completion.

- Tasks span multiple programming languages, making it more demanding than single-language coding tests.

- A 72.5% score places MiniMax M2.1 among state-of-the-art coding models, especially notable for an open-source release.

VIBE-bench (88.6%)

VIBE focuses on execution-level intelligence, not just reasoning.

Tests include:

- Web and UI development

- Interactive simulations

- Mobile and backend workflows

- Tool and agent coordination

An 88.6% average suggests strong, near-production agentic capability, a key requirement for AI-native organizations.

MiniMax M2.1: Real-World Applications

MiniMax M2.1’s increased capabilities bring tangible benefits across a variety of areas:

1. Coding and Development

M2.1 helps with writing, optimizing, debugging, and reviewing code across many programming languages, improving developer productivity and reducing manual effort.

2. Agentic Workflows and Automation

Because the model can manage complex toolchains (browsers, shells, and code interpreters), it can run complete workflows that combine operational and coding tasks with little human involvement.

3. User Interfaces and Interactive Experiences

With demonstrated proficiency in interactive 3D applications, responsive UI design, and cross-platform mobile apps, M2.1 is well suited to projects that require both aesthetic and functional execution.

4. Technical Documentation and Writing

Beyond coding, M2.1 produces improved natural-language output for technical explanations, documentation, and other well-structured responses that support collaboration within engineering teams.

MiniMax M2.1: Pricing and Accessibility

As an open-source model, MiniMax M2.1 is available to the developer community, and MiniMax also offers API and platform access. M2 was praised for significantly lower operating costs and faster inference than comparable proprietary models, which suggests that M2.1 continues this emphasis on cost efficiency.

This lower cost of entry makes experimentation, production deployment, and local hosting more practical, putting advanced AI capabilities within reach without the licensing costs associated with closed-source solutions.

Final Thoughts

MiniMax M2.1 is a significant milestone for open-source AI in coding and agentic applications. By extending multilingual programming support, improving tool integration, and posting strong benchmark results, it is an appealing alternative to closed models, especially for organizations and developers who value transparency, efficiency, and real-world performance.

As AI adoption accelerates in automated workflows and developer-focused productivity tools, models like M2.1 broaden access to cutting-edge technology while supporting flexible, scalable workflows.

Frequently Asked Questions

1. How does MiniMax M2.1 differ from MiniMax M2?

MiniMax M2.1 improves on M2 with broader multilingual coding support, faster and more precise outputs, better integration with agents and tools, and stronger results on benchmarks such as VIBE and SWE-bench Multilingual.

2. Is MiniMax M2.1 actually open source?

Yes. MiniMax M2.1 is released as an open-source model, which allows developers to access, modify, and use it under the terms of its open-source license.

3. How does M2.1 perform on coding benchmarks?

M2.1 scores 72.5% on SWE-bench Multilingual and achieves an 88.6% average on the VIBE full-stack benchmark, demonstrating its ability to handle a wide variety of development tasks.

4. Can M2.1 be used in real-time agent workflows?

Yes. M2.1 is optimized for agentic workflows that involve multi-step toolchains, including shells, browsers, and code interpreters, making it well suited to continuous, automated AI workflows.

5. What programming languages does M2.1 support?

M2.1 provides enhanced support for a broad range of languages, including Rust, Java, C++, Kotlin, TypeScript, JavaScript, and more, covering both application and systems programming.

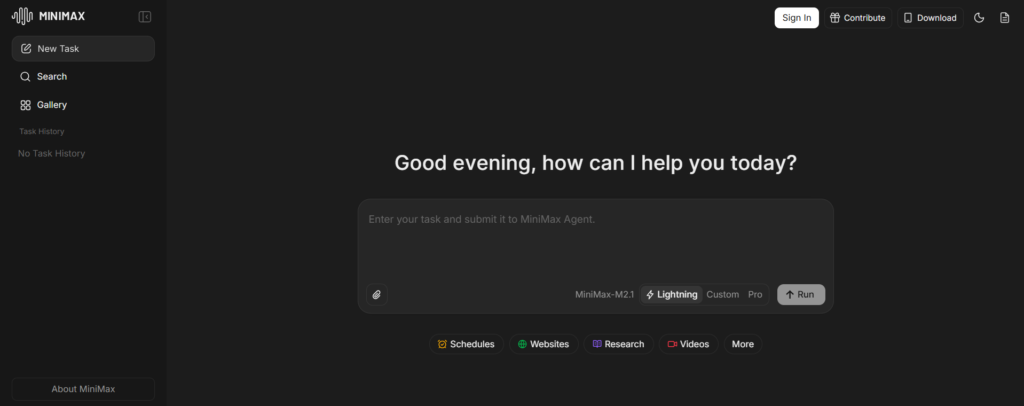

6. Where can developers get access to MiniMax M2.1?

Developers can access M2.1 through MiniMax's Open Platform and APIs, which offer integrations with popular programming tools as well as AI infrastructure services.
