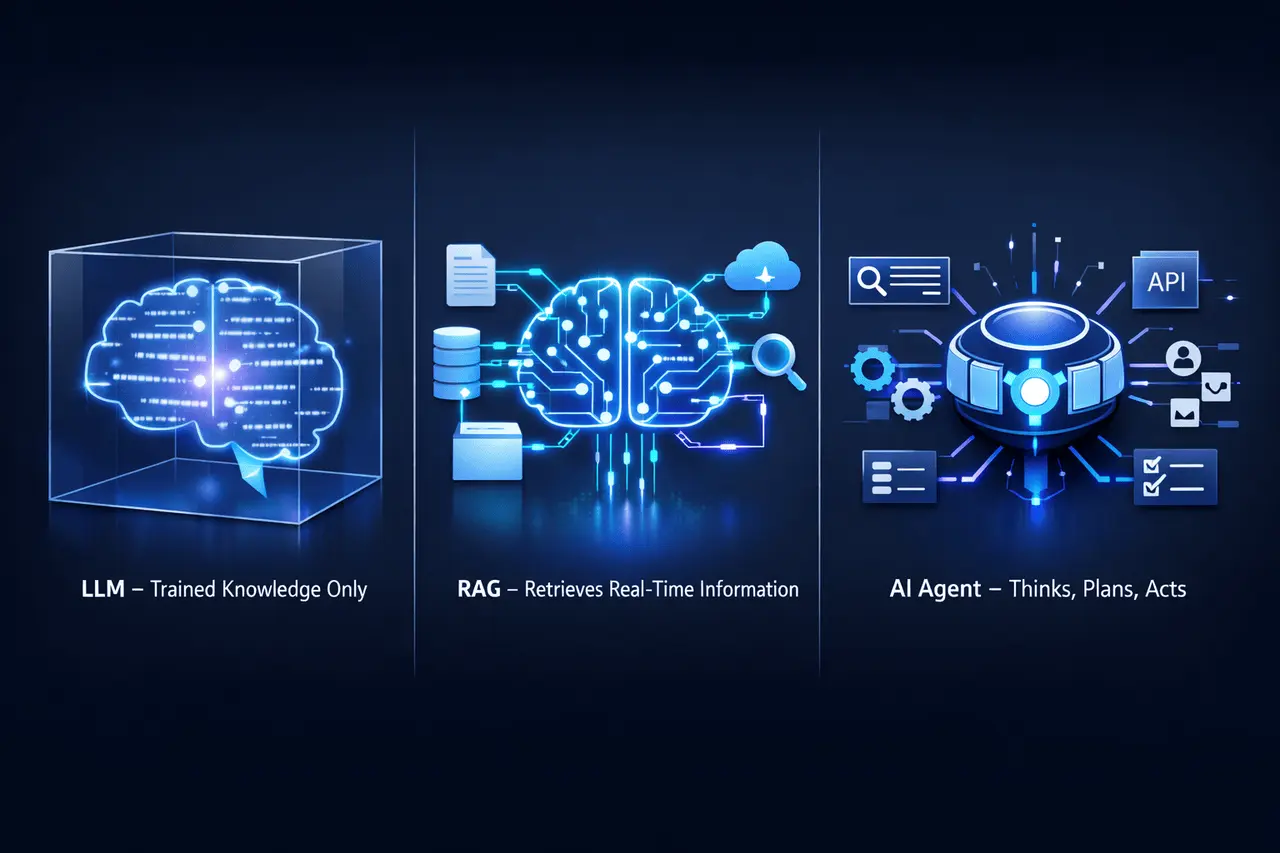

As AI becomes more integrated into workflows, products, and decision-making systems, knowing how different AI architectures work is no longer merely a technical matter but a strategic one. Terms such as LLM, RAG, and AI agent are frequently used interchangeably; however, they are fundamentally different ways of building intelligent systems. Each level addresses a distinct weakness of the previous one, ranging from static knowledge and hallucinations to the inability to reason or plan actions independently. Understanding these distinctions helps teams choose the architecture that delivers accuracy, scalability, and real-world reliability, rather than relying on assumptions about what AI can do.

In this article, we will explore LLM vs RAG vs AI Agents, explaining their core differences, capabilities, limitations, and real-world use cases.

Understanding LLMs, RAG, and AI Agents

Contemporary AI systems are usually described with terms such as LLM, RAG, and AI agent. Although these terms sound similar, they refer to different levels of capability and architecture. Understanding the distinctions is critical for anyone building, deploying, or evaluating AI solutions, especially in enterprise, research, or product contexts.

At a high level, the three approaches differ in how they obtain information, how they reason over it, and what actions they are able to perform. These differences directly affect accuracy, reliability, and suitability for real-world use.

What Is an LLM?

A Large Language Model (LLM) is a neural network that has been trained on vast amounts of text to predict and generate language. Examples include the models developed by companies such as OpenAI and others in the AI ecosystem.

Core Characteristics of LLMs

- Knowledge Is Fixed after Training: An LLM's knowledge of the world is frozen at the moment its training ends. It does not inherently "know" about events, updates, or information released afterwards.

- Pattern-Based Reasoning: LLMs generate responses by identifying patterns in language rather than verifying information against external sources.

- Strong General Capability: They excel at summarisation, explanation, coding help, translation, and creative writing within the limits of their training data.
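The first two characteristics can be illustrated with a deliberately tiny stand-in. The sketch below is not how a real LLM is implemented (real models are neural networks over tokens, not word-pair tables); it is a toy bigram generator, with a made-up corpus and hypothetical function names, that only shows the idea of "knowledge" being frozen after training and output being driven by observed patterns.

```python
import random
from collections import defaultdict

# Tiny made-up "training corpus"; a real LLM is trained on vastly more text.
CORPUS = "the model predicts the next word and the model repeats the patterns it saw"

def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record which words followed each word in the training text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)

def generate(table: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Extend `start` by repeatedly sampling a word that followed the last one in training."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        candidates = table.get(out[-1])
        if not candidates:  # nothing ever followed this word during training
            break
        out.append(rng.choice(candidates))
    return " ".join(out)

BIGRAMS = train_bigrams(CORPUS)  # the "knowledge" is frozen from this point on

print(generate(BIGRAMS, "the", 8))    # follows patterns seen in the corpus
print(generate(BIGRAMS, "today", 8))  # unseen word: nothing learned to draw on
```

Every word pair in the first output appeared in the corpus, and the second call cannot go beyond its starting word because "today" never occurred in training. A real LLM differs in an important way: faced with unfamiliar input, it usually keeps producing fluent text instead of stopping.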

The Hallucination Problem

Even when an LLM lacks the relevant information, it can still generate fluent, confident responses that are inaccurate or fabricated, a phenomenon called hallucination. This isn't deliberate deception; it is a consequence of generating text probabilistically without grounding it in verified or current information.

LLMs on their own are suitable for:

- Conceptual explanations

- Drafting and brainstorming

- Tasks where absolute accuracy is not crucial.

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) improves the performance of an LLM by letting it fetch information from external sources at query time and then use that information to guide its responses.

In short: RAG = LLM + search + context injection.

How RAG Works

- A user asks a question.

- The system retrieves relevant documents from a database, knowledge base, or search index.

- The retrieved content is passed to the LLM as context.

- The LLM generates an answer that is grounded in the retrieved information.
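The four steps above can be sketched in a few lines. This is a minimal illustration, not a production design: the documents are invented, word overlap stands in for real vector or keyword search, and `call_llm` is a hypothetical placeholder for whichever model client you use.

```python
import re

# Hypothetical knowledge base; in practice this is a database or search index.
DOCUMENTS = [
    "Refunds are available within 30 days of purchase.",
    "Support is open Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and extra storage.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Step 2: rank documents by word overlap with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(question: str, context_docs: list[str]) -> str:
    """Step 3: inject the retrieved text into the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

def call_llm(prompt: str) -> str:
    """Step 4 placeholder: swap in a real model call here."""
    return f"[model response to a {len(prompt)}-character prompt]"

def answer(question: str) -> str:
    docs = retrieve(question, DOCUMENTS)
    return call_llm(build_prompt(question, docs))

question = "Are refunds available after purchase?"  # Step 1: the user's question
print(retrieve(question, DOCUMENTS))
print(build_prompt(question, retrieve(question, DOCUMENTS)))
```

The model itself is unchanged throughout; only the prompt differs from a plain LLM call, which is why no retraining is involved.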

Why RAG Matters

- Up-to-date Information: RAG systems can access current documents, policies, or internal information without retraining the model.

- Fewer Hallucinations: By grounding responses in the retrieved sources, RAG can substantially improve factual accuracy.

- Enterprise Readiness: RAG allows AI systems to use private data, such as contracts, manuals, and support tickets.

LLM vs RAG vs AI Agents: Limitations of RAG

RAG isn't 100% reliable. Hallucinations can still occur if:

- The retrieval step returns low-quality or irrelevant documents.

- The model ignores the retrieved context or fails to integrate it.

- The data source is out of date or incomplete.
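One common way to soften the first failure mode is to refuse to answer when retrieval looks weak. The sketch below assumes a simple Jaccard word-overlap score and an arbitrary threshold of 0.2; both are illustrative choices, not values from any particular framework, and would need tuning on real data.

```python
import re

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two word sets: 0.0 (disjoint) to 1.0 (identical)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def retrieve_with_scores(question: str, documents: list[str]) -> list[tuple[float, str]]:
    """Score every document against the question, best match first."""
    q = tokenize(question)
    scored = [(jaccard(q, tokenize(doc)), doc) for doc in documents]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

MIN_SCORE = 0.2  # assumed threshold for illustration only

def grounded_context(question: str, documents: list[str], min_score: float = MIN_SCORE):
    """Return documents that clear the threshold, or None if retrieval is too weak."""
    hits = [doc for score, doc in retrieve_with_scores(question, documents)
            if score >= min_score]
    return hits or None

docs = [
    "Refunds are available within 30 days of purchase.",
    "Support is open Monday to Friday.",
]
print(grounded_context("Are refunds available after purchase?", docs))
print(grounded_context("What is the weather in Paris?", docs))  # None: nothing relevant
```

When `grounded_context` returns `None`, the application can reply that it has no supporting information instead of passing weak context to the model. This does nothing for the other two failure modes, which depend on the model and on the freshness of the data.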

RAG systems are commonly built with orchestration frameworks such as LangChain, which streamline retrieval, prompting, and context management.

What Is an AI Agent?

An AI agent goes a step further. Instead of only responding to requests, an agent can decide what to do next in order to accomplish an objective.

In simplified terms: Agent = LLM + reasoning + tools + decision-making.

LLM vs RAG vs AI Agents: Key Capabilities of AI Agents

- Goal-Oriented Behaviour: Agents break complex goals down into smaller steps and carry them out in sequence.

- Tool Usage: They can select and use tools such as web search, databases, calculators, APIs, or code execution environments.

- Multi-step Reasoning: Agents assess intermediate results and adjust their next actions accordingly.

- Optional Memory: Some agents store prior steps, decisions, or user preferences to maintain continuity and improve performance.
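These capabilities come together in a control loop: decide on a step, call a tool, record the result, and repeat until the goal is met. The sketch below is a minimal illustration under stated assumptions: the tools and their data are invented, and `plan_next_step` is a hard-coded stand-in for the decision a real agent would obtain by prompting an LLM.

```python
from typing import Callable

def calculator(expression: str) -> str:
    """Toy tool: add numbers written as 'a + b + c'."""
    return str(sum(float(part) for part in expression.split("+")))

def lookup(key: str) -> str:
    """Toy tool: read from a hypothetical in-memory price store."""
    store = {"unit_price": "4.5", "shipping": "3"}
    return store.get(key, "unknown")

TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}

def plan_next_step(goal: str, memory: dict[str, str]) -> tuple[str, str]:
    """Stand-in for the LLM's decision, based on what is already in memory."""
    if "unit_price" not in memory:
        return ("lookup", "unit_price")
    if "shipping" not in memory:
        return ("lookup", "shipping")
    if "total" not in memory:
        return ("calculator", f"{memory['unit_price']} + {memory['shipping']}")
    return ("finish", memory["total"])

def run_agent(goal: str, max_steps: int = 5) -> str:
    memory: dict[str, str] = {}  # intermediate results the planner can inspect
    for _ in range(max_steps):   # hard step budget so the loop cannot run forever
        tool, arg = plan_next_step(goal, memory)
        if tool == "finish":
            return arg
        result = TOOLS[tool](arg)
        memory[arg if tool == "lookup" else "total"] = result
    raise RuntimeError("step budget exhausted before the goal was reached")

print(run_agent("total cost of one unit including shipping"))  # prints 7.5
```

The `max_steps` budget is a design choice worth keeping even in a toy: without it, a planner that never reaches "finish" would loop indefinitely.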

LLM vs RAG vs AI Agents: Example Agent Tasks

- Researching a subject, verifying facts, and writing a report

- Monitoring a system, detecting anomalies, and triggering alerts

- Automating workflows across multiple applications

Unlike RAG, which focuses on retrieving information, agents focus on control flow and taking action.

LLM vs RAG vs AI Agents: Choosing the Right Approach

Choosing between an LLM, a RAG system, or an agent depends on your requirements:

- Use an LLM when you need fast, inexpensive language generation and strict accuracy is not a requirement.

- Use RAG when accuracy, traceability, and access to private or up-to-date information are essential.

- Use an AI agent when the task involves planning, making decisions, and interacting with systems or tools.
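As a rough rule of thumb, the guidance above reduces to two questions. The helper below is a hypothetical simplification written for illustration; real decisions also weigh cost, latency, and risk.

```python
def choose_architecture(needs_actions: bool, needs_fresh_or_private_data: bool) -> str:
    """Map two requirement flags to a starting-point architecture."""
    if needs_actions:
        # Planning and tool use call for an agent; retrieval becomes one of its tools.
        return "agent with RAG as a tool" if needs_fresh_or_private_data else "agent"
    if needs_fresh_or_private_data:
        return "RAG"
    return "LLM"

print(choose_architecture(needs_actions=False, needs_fresh_or_private_data=False))  # LLM
print(choose_architecture(needs_actions=False, needs_fresh_or_private_data=True))   # RAG
print(choose_architecture(needs_actions=True, needs_fresh_or_private_data=True))
```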

In many settings, production systems combine these approaches, using agents with RAG-based retrieval as one of several tools.

Why This Distinction Matters

Misunderstanding these terms leads to unrealistic expectations. Many AI failures happen not because the model is weak, but because the wrong architecture was used for the task.

- Expecting an LLM to know current information leads to hallucinations.

- Expecting RAG to reason deeply without orchestration leads to shallow solutions.

- Expecting agents to be safe without constraints leads to unpredictable results.

A clear architectural choice is the foundation of a reliable AI system.

Final Thoughts

LLMs, RAG, and AI agents form a spectrum of capabilities rather than competing concepts. Each builds on the previous layer to overcome a real-world limitation: static knowledge, hallucinations, and the absence of agency.

As AI systems develop, most practical applications will be based on hybrid architectures that combine language models, retrieval systems, and agent-based control. Understanding the role of each component isn't optional. It's crucial to building AI that is actually effective.

Frequently Asked Questions (FAQs)

1. Can RAG completely eradicate hallucinations?

No. RAG significantly reduces hallucinations by grounding responses in retrieved data. However, poor retrieval or poor prompt design can still cause mistakes.

2. Do AI agents use RAG?

Not always. Agents may use RAG as a tool, but it is just one among many; they may also rely on APIs, databases, or internal logic, depending on the task.

3. Is an AI agent more powerful than an LLM?

An agent isn't necessarily "smarter," but it is more capable because it can plan, use tools, and take actions beyond generating text.

4. Does using RAG require retraining the model?

No. RAG works without retraining by supplying retrieved data at inference time.

5. Are AI agents safe for production use?

They can be, but only with solid safeguards, monitoring, and explicit tool permissions.
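As one illustration of what "explicit tool permissions" can mean in practice, the sketch below wraps a tool registry in an allowlist and keeps a simple audit log for monitoring. The tool names and the `make_guarded_caller` helper are hypothetical; real deployments typically add authentication, rate limits, and human approval for risky actions.

```python
class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its allowlist."""

def make_guarded_caller(tools: dict, allowed: set):
    """Wrap a tool registry so that only explicitly allowed tools can run."""
    audit_log = []  # (tool name, decision) pairs, kept for monitoring

    def call(name: str, *args):
        if name not in allowed:
            audit_log.append((name, "denied"))
            raise ToolNotPermitted(f"tool '{name}' is not on the allowlist")
        audit_log.append((name, "allowed"))
        return tools[name](*args)

    return call, audit_log

# Hypothetical tools: one harmless, one destructive.
tools = {
    "read_file": lambda path: f"contents of {path}",
    "delete_file": lambda path: f"deleted {path}",
}
call, audit_log = make_guarded_caller(tools, allowed={"read_file"})

print(call("read_file", "report.txt"))
try:
    call("delete_file", "report.txt")
except ToolNotPermitted as err:
    print(err)
print(audit_log)
```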

6. Which approach is best for enterprise applications?

Many companies benefit from RAG-based systems or controlled agents, depending on whether the primary concern is accuracy or automation.