Google is planning to return to the wearable technology market with its first generation of AI-powered smart glasses, due for release in 2026. Unlike earlier efforts in this area, this initiative is closely tied to the company’s broader XR strategy and its rapidly evolving AI ecosystem. Built on Android XR and powered by multimodal AI, the new glasses are designed to offer hands-free assistance through two kinds of models: display-enabled and audio-first.

As consumer interest in AI wearables grows, this announcement signals Google’s determination to shape the future of personal computing by integrating artificial intelligence seamlessly into daily life.

In this article, we explore Google’s AI smart glasses, including their 2026 launch timeline, key features, expected capabilities, and the impact they could have on the future of wearable AI technology.

Google’s Return to Smart Glasses

After a series of unsuccessful attempts at smart eyewear, the tech giant is planning a comeback. Recent announcements indicate that its first AI-powered smart glasses are scheduled to be available in 2026, marking Google’s return to extended reality (XR) and wearable AI devices. Rather than repeating past mistakes, the company aims to build on the strength of its AI and mobile ecosystem.

What We Know: Two Kinds of AI Glasses

The upcoming glasses, built on the Android XR platform, will come in two distinct versions:

- Audio-first (screenless) glasses: equipped with a camera, microphones, and speakers, these let users talk to Google’s AI assistant, take pictures, get real-time help, and carry out other tasks through computer vision and audio.

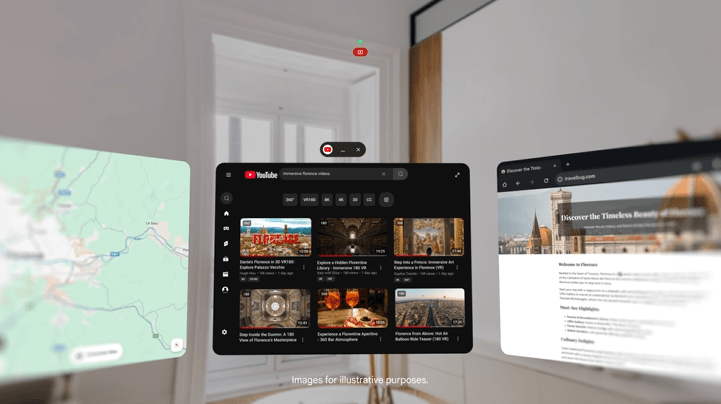

- Display-enabled glasses: these add an in-lens display that shows relevant visual information, such as navigation directions, live translation captions, notifications, and other AI-driven content visible only to the wearer.

Both models are designed to connect to a smartphone for processing power rather than operate as fully independent devices.

The Platform and Ecosystem Behind the Glasses

The new glasses are not being developed as a stand-alone product. They will run Android XR, the same platform recently introduced for XR headsets, giving users access to a full set of apps and services.

One of the devices showcased so far is Project Aura, developed in collaboration with a hardware partner. Aura is described as wired, optical see-through XR glasses: it resembles sleek sunglasses but is tethered to a small companion unit that holds the battery and a trackpad. It offers a field of view of about 70°, and its optical see-through design lets digital overlays appear on top of the user’s real-world view.

The main benefit of this platform-based strategy is that apps already built for Android XR, such as those made for headsets, should work on these glasses without being rewritten. This sharply lowers the barrier for developers and means the glasses should have useful apps from launch.

iPhone users will also be able to use the glasses by installing a companion app, extending Google’s reach beyond its own Android user base.

What’s Driving Google’s Move Back into Wearables?

Several strategic motives lie behind the initiative:

- Innovations in AI and Optics: Advances in generative AI (for tasks such as live translation, context-aware assistance, and image recognition) make today’s eyewear far more useful than earlier generations. Optical see-through and microLED display technologies have also improved, delivering brighter displays and better power efficiency.

- Synergy with the Existing Ecosystem: Google builds Android XR (in collaboration with hardware partners), so the glasses integrate naturally with its broad product range, smartphone services, and apps. This helps counter the fragmentation that has plagued earlier smart-glass attempts.

- Market Opportunity and Competition: Other major players already offer headsets or smart glasses, and consumer and enterprise demand for wearable devices is rising. Launching now lets Google compete more effectively in the fast-moving AI wearables market.

What’s Still Unclear?

Despite the announced 2026 launch and early technical previews, several details remain unclear:

- Form Factor and Final Design: The glasses shown so far are prototypes. The final retail design (shape, size, weight, comfort, and optical quality) could still change.

- Pricing and Availability: Google has not yet announced pricing or a rollout schedule for specific markets.

- Performance and Battery Life: Because processing is offloaded to a paired smartphone, real-world performance, battery consumption, latency, and use-case limitations have not yet been tested at scale.

- Security and Privacy: As with any wearable that carries cameras and sensors that can be always on, privacy concerns are to be expected. Google has not yet published a comprehensive privacy or data-handling policy for the glasses.

Google AI Smart Glasses: Implications for Consumers and Enterprises

For Consumers

- Hands-free Convenience: With audio-first glasses, users can talk to AI assistants without reaching for their phones. This enables tasks such as taking photos, getting instant answers about their surroundings, and issuing voice commands while cooking, travelling, or commuting.

- Augmented Reality on the Go: Display glasses that show navigation instructions, translations, or live captions may prove helpful for learning, travel, or other complex tasks where hands-free guidance is beneficial.

- Cross-Platform Availability: Since the glasses are designed to work with either an Android phone or an iPhone (via a companion app), a wide range of users can use them.

For Professionals and Business

- Remote Work and Productivity: Wearable XR may provide a portable workspace for multitasking, consulting maps or documents while travelling, or receiving real-time guidance on the job.

- Field Work and Training: In roles that need hands-free access to information, such as logistics, maintenance, and healthcare, smart glasses could offer significant productivity gains.

- Ecosystem Leverage: Businesses that have already invested in Android infrastructure may find it simpler to onboard XR devices thanks to Android XR’s compatibility.

Google AI Smart Glasses: Challenges and Things to Watch

Despite their promise, smart glasses have historically seen slow adoption because of high prices, bulky designs, limited social acceptance, and a lack of compelling applications. For the next generation to succeed, companies (including Google) must deliver on battery life, comfort, clear value, and privacy protections. Success will also depend on developer tools arriving on time and on a robust app ecosystem, so the glasses avoid the “empty platform” problem.

Ultimately, consumer acceptance hinges on cost and on real-world value beyond novelty, so Google will have to balance advanced features against affordability and practicality.

Final Thoughts

The return of Google’s smart glasses is a pivotal moment for wearable AI. With significant advances in battery efficiency, optics, and multimodal AI, Google is better positioned than ever to offer devices that go beyond novelty. The 2026 models, which include screenless audio-first glasses and in-lens display models, could transform how people access information, interact with digital environments, and stay productive on the move.

However, the long-term viability of these glasses depends on overcoming familiar hurdles such as comfort, price, security, and privacy, and on building a robust, cross-platform app ecosystem. If Google gets this right, its new AI glasses could be among the most significant steps towards mainstream augmented reality and everyday AI integration.

FAQs

1. When will Google’s AI smart glasses be available?

Google has announced a 2026 launch for its first generation of AI glasses, in both display-enabled and audio-first versions.

2. What features will the glasses have at launch?

Expected features include hands-free AI interaction (via voice and camera), photo capture, and context-aware assistance, plus live translation captions and notifications on the in-lens display for display-enabled models.

3. Do I need a smartphone to use the glasses?

Yes. The glasses are designed to pair with a smartphone for processing, because they are not fully standalone devices.

4. Will the glasses work on iPhone or just Android phones?

Based on the latest announcements, the glasses will support iPhones as well as Android phones, through a companion app that gives iPhone users access to their features.

5. What use cases could these glasses serve?

The practical applications include hands-free navigation, live translation, voice-controlled AI assistance, mobile photography with real-time context-aware support, portable workspaces for professionals, and hands-free productivity tools.

6. What questions remain open about the glasses?

The key open questions are the final design, retail pricing, battery life, real-world performance, the maturity of the app ecosystem, and privacy and data-handling policies.