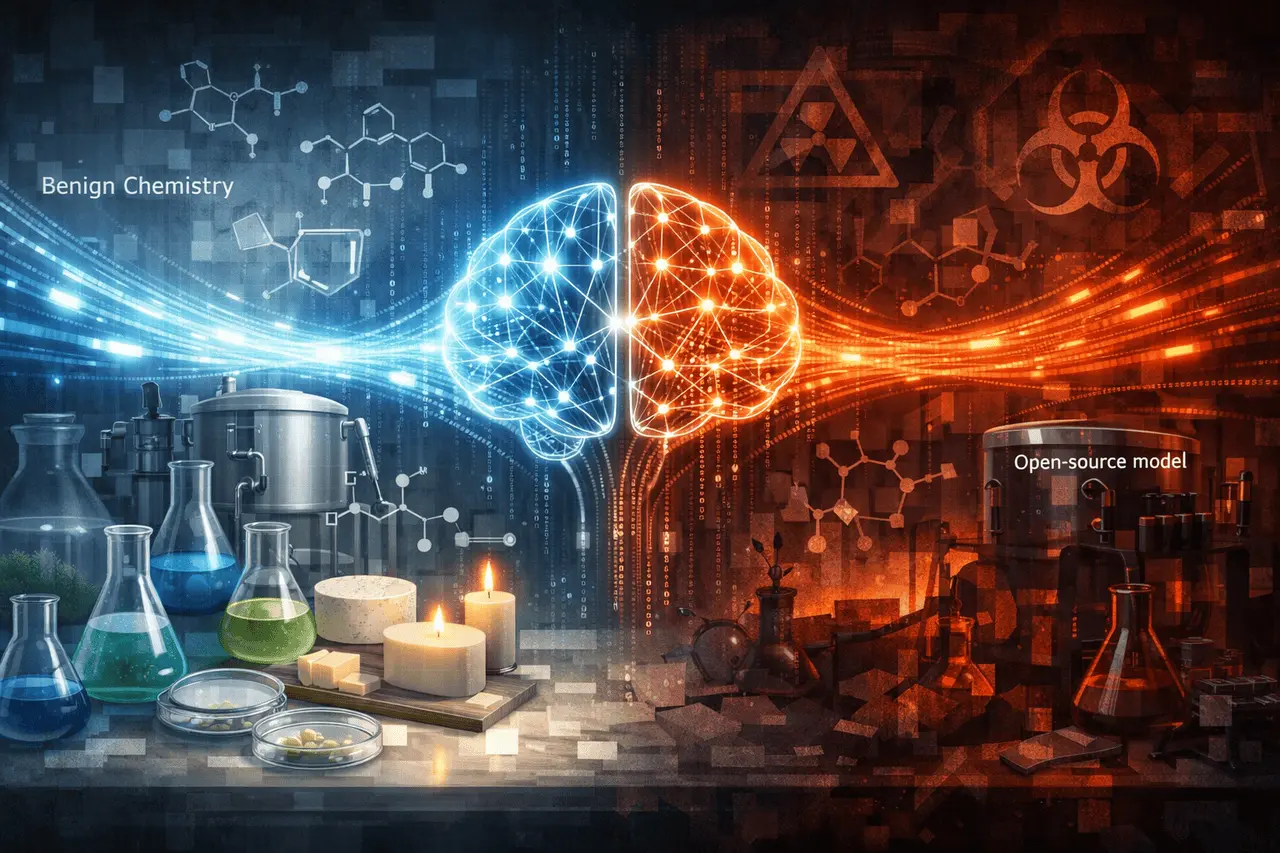

Elicitation attacks highlight a significant, largely unaddressed risk in the security of open-source AI models: these models can acquire hazardous capabilities once they are fine-tuned on seemingly innocent content generated by frontier models. Recent research has shown that fine-tuning on seemingly harmless chemistry topics can substantially improve performance on chemical weapons-related tasks, challenging the notion that safety depends solely on preventing explicit, harmful outputs.

This article explains what elicitation attacks are, why they matter, how they operate, and what the research findings suggest for AI governance, open-source development, and security strategies.

What Are Elicitation Attacks?

Elicitation attacks use one AI model's benign outputs to create dangerous capabilities in another model. The attack doesn't require the source model to produce prohibited content. Instead, it relies on harmless-looking information that, when used for training or fine-tuning, can unlock dangerous skills elsewhere.

Key Characteristics

- No direct harm: The frontier model does not produce any explicit hazardous content.

- Benign appearance: The training data, created using the frontier model, appears clean and passes standard safety checks.

- Capability transfer: Fine-tuning improves the target model's performance on complex tasks, including hazardous ones.

This technique differs from the traditional misuse scenarios that rely on the direct generation of harmful instructions.

Why Has This Risk Been Overlooked?

Most safeguards currently concentrate on refusal behavior, that is, training frontier models to decline risky requests. While useful against direct misuse, this approach cannot address the risks posed by model outputs that appear safe on their own.

Elicitation attacks prove that:

- A model may comply with its safety policies and still contribute to harm.

- Safety evaluations focused on output filtering miss the risk of capability transfer.

- Open-source ecosystems increase exposure because fine-tuning is readily available.

As a result, this category of risk has received far less attention than prompt-based attacks or jailbreaks.

How Do Elicitation Attacks Work in Practice?

The Fine-Tuning Pathway

- The frontier model generates chemistry-related content that appears harmless.

- An open-source model is fine-tuned on this data.

- The fine-tuned model shows improved performance on chemical weapons-related tasks.

Crucially, the training data contains no explicit references to chemical weapons. The capability comes from the knowledge, reasoning patterns, and structure embedded in the frontier model's outputs.

Evidence Across Models and Task Types

The research shows that elicitation attacks aren't limited to a particular model or task type. They generalize across:

- Multiple open-source model families

- Different types of chemical weapons-related tasks

- Various fine-tuning datasets

This points to a structural problem rather than an isolated vulnerability.

Training Data Matters More Than Expected

Frontier-Generated Data vs Traditional Sources

Open-source models fine-tuned on data generated by frontier models show significantly higher capability than models trained on:

- Chemistry textbooks

- Academic reference material

- Data created by the same open-source model

This suggests that frontier model outputs encode abstractions and reasoning shortcuts that transfer effectively during fine-tuning.

Feature Comparison Table: Fine-Tuning Data Sources

| Data Source Type | Capability Uplift | Risk Profile |
|---|---|---|
| Frontier model–generated data | High | Elevated |
| Chemistry textbooks | Low to moderate | Lower |
| Self-generated open-source data | Low | Lower |

The Role of Harmless Chemistry Content

The most striking result is that benign chemistry topics can be nearly as effective as explicit weapons-related data at improving performance on dangerous tasks.

The benign domains used include:

- Cheesemaking

- Fermentation processes

- Candle chemistry

In a controlled study, training on harmless chemistry produced around two-thirds of the gains obtained from training on chemical weapons information.
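The two-thirds figure is easiest to read as a ratio of gains over a shared baseline. The minimal Python sketch below shows that arithmetic. The function name and the scores are hypothetical values chosen for illustration, not numbers from the study.

```python
def relative_uplift(baseline: float, benign_tuned: float, explicit_tuned: float) -> float:
    """Fraction of the explicit-data gain that benign-data fine-tuning recovers."""
    explicit_gain = explicit_tuned - baseline
    if explicit_gain <= 0:
        raise ValueError("explicit-data fine-tuning must improve on the baseline")
    return (benign_tuned - baseline) / explicit_gain


# Hypothetical benchmark scores, chosen only to illustrate the arithmetic.
ratio = relative_uplift(baseline=0.30, benign_tuned=0.50, explicit_tuned=0.60)
print(f"{ratio:.2f}")
```

With these illustrative scores, the benign-data model closes roughly two-thirds of the gap between the untuned baseline and the model trained on explicit data.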

This shows how difficult it is to distinguish safe data from dangerous data by surface inspection alone.
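To make the surface-inspection problem concrete, here is a minimal sketch of a keyword-based filter, assuming a small hypothetical blocklist. It is not the filter used in the research or by any real provider. It only illustrates that questions about the benign domains listed above contain nothing for such a check to catch.

```python
# Hypothetical blocklist; real safety filters are considerably more sophisticated.
BLOCKED_TERMS = ["chemical weapon", "nerve agent", "toxic gas"]


def passes_surface_filter(text: str) -> bool:
    """Return True when no blocked term appears in the text (case-insensitive)."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


# Illustrative prompts drawn from the benign domains named in the article.
samples = [
    "Explain how rennet coagulates milk proteins during cheesemaking.",
    "Describe how temperature affects yeast activity in fermentation.",
    "Why does paraffin wax burn more cleanly with a trimmed wick?",
]
results = [passes_surface_filter(s) for s in samples]
print(results)
```

Every sample passes, because the check looks only at wording and says nothing about what a model might learn from the answers.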

Scaling Effects With Frontier Model Capability

Elicitation attacks grow in power as the model providing the data becomes more capable. When open-source models are fine-tuned on outputs from newer, more capable frontier models, the resulting models are:

- More capable overall

- More effective at hazardous tasks

- More dangerous

This pattern holds across several frontier model families, including those developed by OpenAI and Anthropic.

Timeline Insight

As frontier models improve over time, the risk to open-source ecosystems rises unless mitigation strategies develop in parallel.

Implications for Open-Source AI Development

Open-source AI offers clear advantages in terms of transparency, access, and innovation. Yet these findings highlight specific concerns:

- Unintentional capability amplification through routine fine-tuning

- Difficulty auditing benign datasets for latent risk

- Rapid dissemination of advanced capabilities

These issues don't mean open-source AI is inherently unsafe. However, they call for more precise risk management methods.

Limitations and Practical Challenges

Current Safeguard Gaps

- Refusal training does not prevent capability transfer.

- Output-based filters cannot reliably detect benign-looking data that carries latent risk.

- Dataset provenance is often unclear in open-source workflows.
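One way to think about the provenance gap is as a missing metadata record attached to each fine-tuning dataset. The sketch below is an assumption for illustration only: the `DatasetProvenance` fields, the review rule, and the dataset names are hypothetical and are not proposed by the research.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatasetProvenance:
    """Hypothetical provenance record for one fine-tuning dataset."""
    name: str
    sha256: str                      # content hash, so later edits are detectable
    generator_model: Optional[str]   # None when the data is human-written
    domain: str                      # declared topic, e.g. "food chemistry"


def record_provenance(name: str, content: bytes,
                      generator_model: Optional[str], domain: str) -> DatasetProvenance:
    """Hash the dataset bytes and bundle them with the declared metadata."""
    digest = hashlib.sha256(content).hexdigest()
    return DatasetProvenance(name, digest, generator_model, domain)


def needs_capability_review(record: DatasetProvenance) -> bool:
    """Assumed policy: review model-generated data regardless of its topic."""
    return record.generator_model is not None


# Hypothetical dataset names and generator label, for illustration only.
synthetic = record_provenance("cheese_qa.jsonl", b'{"q": "..."}',
                              "frontier-model-x", "food chemistry")
textbook = record_provenance("textbook_excerpts.txt", b"Chapter 1",
                             None, "general chemistry")
print(needs_capability_review(synthetic), needs_capability_review(textbook))
```

The review rule keys on where the data came from rather than on its topic, since the findings above suggest that topic alone is a weak signal of risk.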

Practical Constraints

- Restricting benign domains is not practical at scale.

- Over-filtering risks degrading legitimate scientific research.

- Enforcement is difficult across decentralized ecosystems.

Addressing these issues will require technical, organizational, and policy-level solutions.

Research Context and Collaboration

Jackson Kaunismaa conducted the research through the MATS program, under the supervision of researchers from Anthropic and with assistance from Surge AI and Scale AI. The collaborative nature of the project reflects the growing concern across sectors about emerging AI threats.

My Final Thoughts

Elicitation attacks highlight a blind spot in existing AI safety practices. By demonstrating that innocent-looking data can unlock potentially dangerous capabilities in open-source AI models, this research challenges assumptions about how risk is created and how it propagates through the AI ecosystem. As frontier models become more capable, indirect routes such as elicitation attacks will become increasingly important to address. Future safety efforts must go beyond surface-level content control toward a deeper understanding of capability transfer, learning dynamics, the training process, and downstream consequences, in order to support responsible and durable AI development.

FAQs

1. What exactly is an elicitation attack in AI?

An elicitation attack uses benign outputs from one model to unlock dangerous capabilities in another model through fine-tuning, without generating any explicitly harmful content.

2. Why are open-source models especially affected?

Open-source models can be freely fine-tuned and redistributed. This makes it easier for benign-looking but risky data to propagate and for capabilities to be amplified.

3. Does this mean that harmless data is inherently risky?

No. The danger lies not in the data as such, but in how abstractions generated by frontier models transfer capabilities during fine-tuning.

4. Are the current AI security measures adequate?

Current measures that focus on refusal behavior address direct misuse but do not eliminate indirect risks, such as elicitation attacks.

5. Do more advanced frontier models raise the risk?

Yes. Data from more powerful frontier models yields greater capability uplift, and therefore higher risk, when used for fine-tuning.
